# Kafka CDC how-to #

In this article, we explain step by step how to implement a PoC (Proof of Concept) for testing the integration between an Oracle database and Kafka through GoldenGate technology.

This integration is useful for the following kinds of use cases:

- Given a legacy monolithic application with an Oracle database as its single source of truth, it should be possible to create a real-time stream of update events just by monitoring changes to the relevant tables. In other words, we can implement data pipelines from legacy applications without changing them.
- We need to guarantee that a Kafka message is published only if a database transaction completes successfully. To guarantee this, we can write the Kafka message (always transactionally) to an ad-hoc table monitored by GoldenGate which, through its Kafka Connect handler, publishes an "INSERT" event containing the original Kafka message to unwrap.

So, given an Oracle database, any DML operation (INSERT, UPDATE, DELETE) inside a successfully completed business transaction is converted into a Kafka message published in real time.
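
The idea of writing the Kafka message transactionally to an ad-hoc monitored table can be illustrated with a minimal sketch. It uses Python's built-in `sqlite3` module as a stand-in for Oracle, purely so the example is self-contained. The table names `orders` and `kafka_outbox`, the `orders` topic, and the helpers `setup` and `place_order` are hypothetical and do not come from the PoC itself. GoldenGate is not involved here: the sketch only shows that the message row exists if and only if the business transaction commits.

```python
import json
import sqlite3


def setup():
    """Create an in-memory database with a business table and an outbox table.

    Both table names are hypothetical; in the PoC the outbox would be the
    ad-hoc Oracle table monitored by GoldenGate.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.execute(
        "CREATE TABLE kafka_outbox ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, topic TEXT, payload TEXT)"
    )
    conn.commit()
    return conn


def place_order(conn, order_id, amount, fail=False):
    """Write the business row and the outgoing message in one transaction.

    Returns True if the transaction committed, False if it was rolled back.
    """
    message = json.dumps({"order_id": order_id, "amount": amount})
    try:
        # The connection context manager commits on success and rolls back
        # if an exception escapes the block.
        with conn:
            conn.execute(
                "INSERT INTO orders (id, amount) VALUES (?, ?)",
                (order_id, amount),
            )
            conn.execute(
                "INSERT INTO kafka_outbox (topic, payload) VALUES (?, ?)",
                ("orders", message),
            )
            if fail:
                # Simulated failure after both inserts: neither row survives.
                raise RuntimeError("simulated business failure")
        return True
    except RuntimeError:
        return False


if __name__ == "__main__":
    conn = setup()
    place_order(conn, 1, 10.0)             # committed: one outbox row
    place_order(conn, 2, 20.0, fail=True)  # rolled back: no outbox row
    for row in conn.execute("SELECT topic, payload FROM kafka_outbox"):
        print(row)
```

Because the outbox row is part of the same transaction as the business change, a CDC tool reading the committed changes only ever sees messages for transactions that succeeded.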

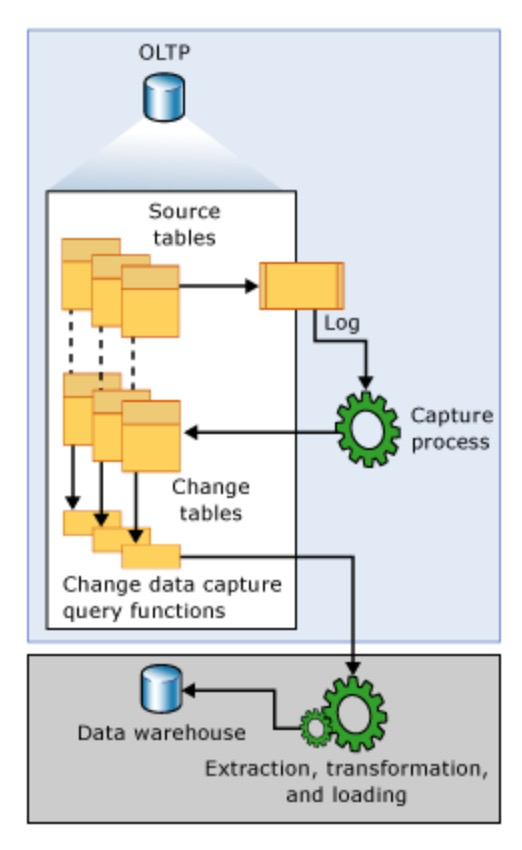

Oracle provides a Kafka Connect handler in its Oracle GoldenGate for Big Data suite for pushing a CDC (Change Data Capture) event stream to an Apache Kafka cluster.
